How to… Plan your Prep

So you know your data isn’t right for the purpose you need it to be; but what do you do about it? The next stage in this process after this realisation is key in developing a solution to your challenge but how do you approach this next step when all you see are the issues in front of you?

Our approach to this challenge is a staged approach that should help you plan, define the outcome and provide a framework of steps to solve your challenges.

To cover this, let’s talk through an example data set, but keep it simple. Here’s some sales data from Chin & Beard Suds Co (a fake retailer that sells soap).

Stage 1 - Know Your Data

Without understanding your dataset as it currently stands, you will not be able to deliver the results you need. Sometimes in small datasets, this understanding can be very easy to form. Here’s what to look for in small datasets:

Columns, rows and crosstabs - how is the data structured?

Two columns of dimensions with each other column a month of values

Headers and fields - are they all there as expected?

Month Headers should ideally be one column with a value alongside

Data Types - what type of data exists in each field?

Two string values but then each subsequent column is a value

Granularity of Rows - what does each row represent?

Each row is a different product sold in a store

Nulls - are there any?

None present so can disregard this factor for this example

We often find that a quick sketch of the table will help you think through what is going on in any aspect of your table and answer the questions above. Here's how we'd sketch the data source above:

By identifying categorical data and the fields that contain the values you will want for your analysis, you will understand: how complete the data set is, why it isn't ready for analysis yet and what it might take to prepare the data. Notice, it's not vital that everything is captured. By simplifying the sketch, you start to focus on the core issues rather than drowning in the details.

Stage 2 - The Desired State

As you become more experienced in producing your own data sets, this stage becomes a lot easier and almost a second nature. You will be able to look at a data set and know what the desired outcome should be. Whilst you are learning that, this sometimes feels difficult to picture - so sketch it! More on that shortly!

So what should your Desired State be?

For most modern data analysis tools, or visualisation software, you will need to structure your data into columns that have a name for that data in the first row. Each subsequent row should be a record for an individual recording / instance. Most tools will require a single input table so all of your data fields you need for your analysis should be in this single table.

The sketch - how to form it

For simple data sets this can be very easy to just doodle out. For wide data sets with lots of columns, this stage can become a bigger task. Start by listing off your categorical data. These are things that you will analyse the numbers by, for example, region, customer, product or course. For each unique combination of these categorical data fields will set the granularity of your Desired State data set. In the example dataset, the combination of Branch, Product and Date will set the value being recorded.

Secondly, you can now add a column for each of the measures you may want to analyse. At this point you will also want to consider values that might help the analytical tools’ performance. Maybe adding a ranking, market share or running total at this stage will help make your dataset more accessible to novice users but also performant to complete your analysis faster.

Returning to our example, here's what we need to output to complete our analysis:

The Categorical data fields will be Branch, Product and Date. Note how capturing the data type here is useful too. The output will only have one field for analysis - a simple sales value.

Stage 3 - From KYD to the Desired State

Take your hands away from the keyboard… for this stage you won’t need a computer, just your brain. By looking at the original data and having understood what you want the data to be, you will start to see some of the transitions you will need to take to clean, pivot, join and aggregate the data as required.

Start by making a list of the transitions you think you will need to make. You may not end up doing them all but you’re not building the workflow yet so it doesn’t matter. Here are some of the questions we ask at this stage:

Columns

Too many? Filter unnecessary fields.

Too few? Maybe Join a secondary data set.

Clean Field Names? If not, you will need to amend these.

Calculations needed? If you have all the data fields you need to form the new columns then calculations will be required. By completing your calculations in your Preparation tool, your analysis tool will require less computing power to process the formation of the data.

Rows

Too many? Filter out unnecessary rows. Aggregate the data to be less granular.

Too few? Transpose columns to create more. Add an additional data set through unioning or join a data scaffold.

Clean records? Clumped data? Punctuation where there shouldn’t be any? You will need to handle these different challenges but take note of each change as they will likely be separate data prep steps

Blanks? Should they be there? Will need to filter them if not or find a way to fill the gaps otherwise.

Multiple data sources

Join together to add more columns

Union together to add more rows

Let's return to our example and see how this works for this use case:

Very quickly we can spot the challenges by taking a few quick notes on my sketch of 'frame' of the data. You might not spot all of the challenges but this stage just gets you thinking about what you have to work with. Here we can see:

We have two categorical data fields (Branch and Product).

The rest of the columns we have are all headed by dates and have the sales values inside.

The Date needs to be pivoted to form our third categorical field. This will change the number of rows of data we have in the data set as we will get an additional row per each month, branch and product combination.

The pivoting process will create a column of values.

Punctuation replacing the space in the Branch and Product fields will need to be removed.

For the rows of data, the analysis requires a row per unique combination of branch (town), product and month. This means we will need to:

Aggregate the Lewisham_1 and Lewisham_2 sales together to form the data at the correct granularity. This will also change the number of rows we may have in the Desired State data set compared to the Original Data set.

Here's some other ideas on what other steps we also might need to take:

Rename fields

Change data types of fields

Stage 4 - Build It

Ok, you can use the mouse and keyboard now to build out each of those steps. Where do you start? Well making a basic step-by-step plan would be a good way to go. With Tableau Prep, you can quickly change the order of the transitions or add forgotten ones to go from the original data to the Desired State. You might not get the workflow right first time but you will be a lot closer for having planned out these steps.

Also, you might not know what tool, transition or calculation to use to make the change you require, but you will be able to take a step back to rethink the problem and not get lost from the next steps when you do so.

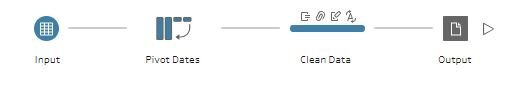

So let's complete our example. With all the steps captured above, I used Tableau Prep Builder to create this workflow from Inputing the data, to Outputing the file as a csv. The first step (icon) in the image below is the 'Input' step where the data is being brought into Prep for processing. The second icon. is pivoting multiple columns to rows of data instead. This is where all of the different dates are being converted into one column with another column alongside holding the respective value for that date, branch and product.

Although in Tableau Prep Builder, the clean step (the third blue icon) actually contains a lot of detail that is captured in the tools 'Changes pane'. The image below shows not only the steps taken but also what order they occur in:

Created a calculation called 'Product' that changes 'Liquid-Soap' to 'Liquid and 'Soap-Bar' to 'Bar'.

Grouped together the two Lewisham stores

Change the 'Pivot1 Names' column to 'Date'

Change the data type of the 'Date' to be a date

Rename the 'Pivot1 Values' field to 'Sales'

This leaves us with a nice clean dataset ready for analysis when outputted in the fourth and final step:

Click here to access all the files used if you want to have a go at the exercise yourself.